Benchmarks

In this section we run simple, easy-to-understand mini benchmarks to find out which template parser renders fastest. Keep in mind that speed is not everything. Template parsers differ in features, so choose carefully: depending on your application's needs, you may have to use a slower parser.

We compare seven template engines/parsers, including Ace, HTML, Jet and Pug.

Each benchmark test consists of a template, a layout, a partial and template data (a map). Every response has the same total size in bytes. The Ace and Pug parsers minify the template before rendering, so the template files of all the other engines are minified too (no newlines or spaces between HTML blocks).

The benchmark code for each template engine can be found at: https://github.com/kataras/iris/tree/main/_benchmarks/view.

System

Processor: 12th Gen Intel(R) Core(TM) i7-12700H

RAM: 15.68 GB

OS: Microsoft Windows 11 Pro

v1.2.4

go1.20.2

v19.5.0

Terminology

Name is the name of the template engine (parser) used in a particular test.

Reqs/sec is the average number of requests processed per second (higher is better).

Latency is the time from when the client sends a request until the response gets back to that client (lower is better).

Throughput is the rate at which data is transferred (higher is better). It depends on the response length (body + headers).

Time To Complete is the total time (in seconds) the test took to complete (lower is better).

Results

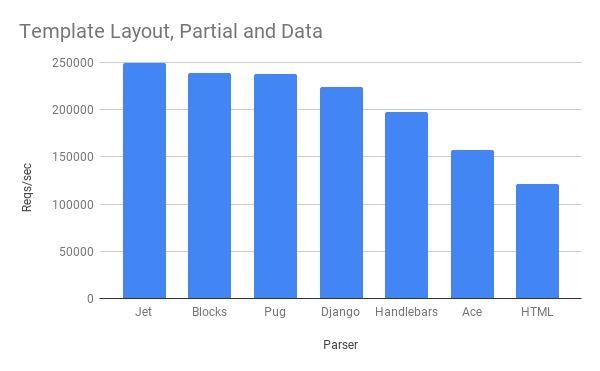

Test: Template Layout, Partial and Data

📖 Fires 1,000,000 requests with 125 concurrent clients and receives an HTML response. The server handler sets some template data and renders a template file that consists of a layout and a partial footer.

Last updated: Mar 17, 2023 at 10:31am (UTC)

As the results above show, Jet is the fastest and HTML is the slowest.
